Exploration Software Framework#

Goals#

Familiarize yourself with the capabilities of the Riallto exploration framework for creating NPU applications

Understand the Riallto %%kernel magic

Review the structure of the Riallto Python package, including its most important modules and methods

Find out where to access documentation

Understand the services MLIR-AIE provides to Riallto


Riallto Exploration Framework#

In section 4, you will learn how to develop applications for the NPU using the Riallto framework.

The Riallto exploration framework has two parts:

JupyterLab

Riallto uses JupyterLab, a browser-based, integrated development environment (IDE). Kernels for the compute tiles are written in C++. Using Riallto, you can use Python to build and test your application from a Jupyter notebook.

AIEtools

These are the compilation tools used to build Ryzen AI NPU applications.

The AIEtools are Linux-based. On Windows laptops, they run in Windows Subsystem for Linux 2 (WSL 2). If you have installed Riallto and are reading this material as a Jupyter notebook on your Windows laptop, WSL 2 should already be installed and enabled on your system. WSL 2 is not required for the Linux installation; on Linux, the AIEtools are contained within a Docker container.

!wsl -d Riallto cat /etc/os-release

NAME="Ubuntu"
VERSION="20.04.3 LTS (Focal Fossa)"
ID=ubuntu
ID_LIKE=debian
PRETTY_NAME="Ubuntu 20.04.3 LTS"
VERSION_ID="20.04"
HOME_URL="https://www.ubuntu.com/"
SUPPORT_URL="https://help.ubuntu.com/"
BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"
PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"
VERSION_CODENAME=focal
UBUNTU_CODENAME=focal

The output of this cell should report the version of the operating system where the Riallto tools are installed. If you do not see this output, or if you installed the Lite version of Riallto, refer to the Riallto installation instructions to install the Full version.
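You may prefer to check the result programmatically rather than by eye. The output uses the standard os-release format: one KEY=value line per setting, with optional shell-style quotes around the value.

The sketch below parses that format using only the Python standard library. The helper name `parse_os_release` is hypothetical, and it is not part of Riallto. The sample text is copied from the output above.

```python
import shlex

def parse_os_release(text: str) -> dict:
    """Parse os-release style KEY=value lines into a dict (hypothetical helper)."""
    info = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines, comments, and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # shlex.split undoes shell-style quoting, e.g. '"20.04"' becomes '20.04'.
        info[key] = " ".join(shlex.split(value))
    return info

sample = """NAME="Ubuntu"
VERSION="20.04.3 LTS (Focal Fossa)"
ID=ubuntu
VERSION_ID="20.04"
"""

info = parse_os_release(sample)
print(info["ID"], info["VERSION_ID"])  # ubuntu 20.04
```

In IPython, `lines = !wsl -d Riallto cat /etc/os-release` captures the command output as a list of strings. Joining them with `"\n".join(lines)` gives text you can pass to the parser.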

! uname -r

6.10.0-061000rc2-generic

Programming the NPU#

To recap, the Ryzen AI NPU is a dataflow architecture. Data flows through a series of compute nodes within the NPU. Each node is assigned specific processing tasks. Data is directed only to the nodes where it is needed for computation.

A Ryzen AI dataflow application consists of:

the software kernels that run on compute nodes

the NPU graph that defines the tile connectivity and the movement of data between the tiles

To build an application for the NPU, we need to create dataflow programs. A dataflow program effectively translates the application, as described by its dataflow graph, into code that can be compiled into an executable application for the NPU.
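To make the two ingredients concrete, the sketch below models a tiny dataflow application in plain Python:

- a dictionary of kernel functions plays the role of the software kernels;
- a list of (producer, consumer) edges plays the role of the graph.

Each node runs once its producer has finished and receives only that producer's output. This is a conceptual model only. The node names and the `run_graph` helper are hypothetical, and this is neither Riallto's API nor how the NPU actually executes.

```python
from graphlib import TopologicalSorter

# Software kernels: each node applies one processing task to the data it receives.
kernels = {
    "scale": lambda xs: [2 * x for x in xs],
    "offset": lambda xs: [x + 1 for x in xs],
    "clip": lambda xs: [min(x, 10) for x in xs],
}

# Graph: (producer, consumer) edges; "input" is the external data source.
edges = [("input", "scale"), ("scale", "offset"), ("offset", "clip")]

def run_graph(kernels, edges, data):
    """Run each kernel once its producer has finished (toy, single-producer model)."""
    producers = {}
    for src, dst in edges:
        producers.setdefault(dst, set()).add(src)
    results = {"input": data}
    for node in TopologicalSorter(producers).static_order():
        if node == "input":
            continue
        (src,) = producers[node]  # this toy model allows exactly one producer per node
        # Each node receives only the output of the node that feeds it.
        results[node] = kernels[node](results[src])
    return results

out = run_graph(kernels, edges, [1, 2, 3, 4, 5])
print(out["clip"])  # [3, 5, 7, 9, 10]
```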

There are two main parts to a program that runs on the Ryzen AI NPU:

AI Engine software kernel(s)#

AI Engine software kernels are the programs that run on the individual AI Engines in the compute tiles. You may choose to run one software kernel per compute tile. You could also distribute the execution of a single kernel across multiple compute tiles. A third option is to run multiple distinct kernels on a single tile. And, of course, hybrid combinations of all three options are also valid.
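Distributing a single kernel across several compute tiles usually means splitting the data, so that each tile runs the same kernel on its own slice. The sketch below emulates that split in plain Python, with each "tile" represented by one function call.

The `invert` kernel and the `split_across_tiles` helper are hypothetical. The data movement a real NPU application needs between tiles is deliberately left out.

```python
def invert(pixels):
    """Example element-wise kernel: invert 8-bit pixel values."""
    return [255 - p for p in pixels]

def split_across_tiles(kernel, data, num_tiles):
    """Run the same kernel on up to num_tiles contiguous slices and join the outputs."""
    if not data:
        return []
    chunk = -(-len(data) // num_tiles)  # ceiling division so no element is dropped
    slices = [data[i:i + chunk] for i in range(0, len(data), chunk)]
    outputs = [kernel(s) for s in slices]  # one call per emulated tile
    return [value for out in outputs for value in out]

frame = list(range(10))
print(split_across_tiles(invert, frame, 4) == invert(frame))  # True
```

Non-overlapping slices like these are only correct for element-wise kernels such as `invert`. A kernel that reads neighboring values, such as a 2D filter, would need each slice to carry a border of extra data from its neighbors.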

The AIE compiler tools used in Riallto support building C/C++ software kernels for the AI Engine processors. Creating software kernels is similar to developing software for other (embedded) processors. However, you need to take special considerations into account to make use of the features the AI Engine supports, such as VLIW, SIMD, a 512-bit data path, and fixed-point and floating-point vector support. Notebooks in section 3 introduced these features. In section 4, you will see how to write software kernels that use them.
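The benefit of the 512-bit data path is easiest to see with a little arithmetic. One 512-bit vector holds 512 / 8 = 64 8-bit values, 32 16-bit values, or 16 32-bit values.

The sketch below computes those lane counts. It also counts how many vector operations a buffer would need if every operation processed a full vector. This is an idealized model that assumes full-width operations throughout. Real AI Engine throughput depends on the instruction, the data type, and how the kernel is written.

```python
VECTOR_BITS = 512  # width of the AI Engine data path mentioned above

def lanes(element_bits: int) -> int:
    """How many elements of the given width fit in one 512-bit vector."""
    return VECTOR_BITS // element_bits

def vector_ops(num_elements: int, element_bits: int) -> int:
    """Idealized count of full-width vector operations needed to touch every element."""
    per_vector = lanes(element_bits)
    return -(-num_elements // per_vector)  # integer ceiling division

print(lanes(8), lanes(16), lanes(32))  # 64 32 16

# Example workload: one 1280 x 720 frame of 8-bit grayscale pixels.
pixels = 1280 * 720
print(pixels, vector_ops(pixels, 8))  # 921600 14400
```

Under these assumptions, a scalar loop over the same frame needs 921,600 element operations, 64 times as many as the vectorized version.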

NPU graph#

The notebooks in section 3 showed how dataflow graphs are used to represent NPU applications. Dataflow graphs are abstract graphical representations of an application. The NPU graph is similar in concept to, but different from, the dataflow graphs. The NPU graph is a detailed programmatic description of the connectivity between tiles and of how data is moved in the array. It captures how the dataflow graph is mapped to the NPU array to create a mapped graph. It is compiled into machine code that is used to configure the NPU.
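The sketch below illustrates the difference between the two kinds of graph. It takes an abstract dataflow graph and assigns each node to a tile, producing a "mapped" graph whose edges are tile-to-tile connections.

Several details are hypothetical:

- the tile coordinates and tile labels;
- the rule that places kernels on consecutive rows of one column;
- the use of the kernel names purely as labels.

None of these describe the Ryzen AI array layout or Riallto's placement algorithm.

```python
# Abstract dataflow graph: which node feeds which.
dataflow = [("src", "rgba2gray"), ("rgba2gray", "threshold"), ("threshold", "sink")]

def map_graph(edges, io_nodes, column=0, first_compute_row=2):
    """Toy placement: I/O nodes share one interface tile, kernels get consecutive compute tiles."""
    placement = {}
    next_row = first_compute_row  # hypothetical coordinate, not the real array layout
    for src, dst in edges:
        for node in (src, dst):
            if node in placement:
                continue
            if node in io_nodes:
                placement[node] = ("interface", column, 0)
            else:
                placement[node] = ("compute", column, next_row)
                next_row += 1
    # The mapped graph connects tiles rather than abstract nodes.
    mapped = [(placement[s], placement[d]) for s, d in edges]
    return placement, mapped

placement, mapped = map_graph(dataflow, io_nodes={"src", "sink"})
for link in mapped:
    print(link)
```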

You will see how to write both kernels and graphs and how they are compiled to develop an application to run on the NPU.

Riallto npu Python Package#

The Riallto npu Python package contains:

Methods to call the graph compiler

Methods to call the AI Engine compiler

Application builder

x86 memory buffer allocation

Methods to synchronize data between the CPU and NPU

Reusable graph templates

Special helper methods intended to be used in a Jupyter notebook to:

Visualize the NPU application

Display NPU NumPy arrays as images

A small library of reusable example image processing software kernels

ONNX support (covered in section 5)

IPython cell magics#

You can write your kernel in two ways:

By using a code editor to create a .c or .cpp source file, or

By writing the C/C++ source code in any Jupyter Notebook code cell that is annotated with a special Riallto IPython cell magic.

Jupyter notebooks use the Interactive Python (IPython) interpreter by default. IPython is an interactive shell that extends the standard Python interpreter with features that enhance programmer productivity. One of these features is the cell magic. We have defined our own Riallto cell magic called %%kernel.

When a code cell starts with %%kernel, all subsequent code in the cell will no longer be interpreted as regular Python code. Instead, it will be passed as input to the code handler we have written for %%kernel.
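The dispatch step can be pictured with a few lines of plain Python. This is a simplified model, not IPython's implementation:

1. it inspects the first line of a cell;
2. if that line starts with %%name for a registered magic, it passes the rest of the cell to the handler as a string;
3. otherwise, it executes the cell as Python.

The registry, `cell_magic`, `run_cell`, and the placeholder handler are hypothetical names. In real IPython, cell magics are registered through `IPython.core.magic`, for example with its `register_cell_magic` decorator. Handlers there also receive the `(line, cell)` pair used below.

```python
CELL_MAGICS = {}

def cell_magic(name):
    """Decorator that registers a handler for cells starting with %%name (toy registry)."""
    def register(func):
        CELL_MAGICS[name] = func
        return func
    return register

@cell_magic("kernel")
def kernel_handler(line, cell):
    # Placeholder: the real %%kernel handler builds a Kernel object from this C++ text.
    return {"args": line, "source": cell}

def run_cell(source):
    """Route magic cells to their handler; run everything else as Python."""
    first, _, body = source.partition("\n")
    if first.startswith("%%"):
        name, _, args = first[2:].partition(" ")
        return CELL_MAGICS[name](args, body)  # the body is never executed as Python
    exec(source, {})
    return None

result = run_cell("%%kernel\nvoid passthrough(uint8_t *in, uint8_t *out, uint32_t n) {}\n")
print(result["source"])
```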

You can find more information about the Riallto %%kernel magic by running the following cell.

import npu

?%%kernel

Docstring:
Specify a compute tile C++ kernel and return an npu.build.Kernel object.

This cell magic command allows users to input C++ kernel code within
a Jupyter notebook cell. It then returns a corresponding Kernel object
that can be compiled into an object file for use in a Riallto application.
The Cpp source must return a void type, input and output buffers are specified
as pointer types, as parameters are specified with non-pointer types.

Header files included in the directory where the notebook is are permitted.

Parameters
----------
cell : str
    The string content of the cell, expected to be C++ code defining
    the kernel.

Returns
-------
Kernel : object
    Returns a Kernel object that has the same name as the last function
    defined in the cell magic. See npu.build.Kernel.

Examples
--------
In a Jupyter notebook %%kernel cell

    void passthrough(uint8_t *in_buffer, uint8_t* out_buffer, uint32_t nbytes) {
        for(int i=0; i<nbytes; i++) {
            out_buffer[i] = in_buffer[i];
        }
    }

This will construct a passthrough npu.build.Kernel object that can be used within
a callgraph to construct a complete application.
File: c:\users\shane\appdata\local\riallto\riallto_venv\lib\site-packages\npu\magic.py
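The docstring above states three rules:

- the kernel returns void;
- pointer arguments are buffers and non-pointer arguments are scalar parameters;
- the resulting Kernel object is named after the last function in the cell.

The sketch below applies those rules to C++ source with a regular expression. `describe_kernel` is a hypothetical helper for illustration only. It handles simple signatures like the passthrough example, and it is not how Riallto parses kernels.

```python
import re

# Matches "void name(args) {" for simple signatures (arguments may span lines).
SIGNATURE = re.compile(r"\bvoid\s+(\w+)\s*\(([^)]*)\)\s*\{")

def describe_kernel(source: str) -> dict:
    """Return the last void function's name and classify its arguments."""
    matches = SIGNATURE.findall(source)
    if not matches:
        raise ValueError("no void function found")
    name, arg_list = matches[-1]  # the last function defined gives the kernel its name
    buffers, params = [], []
    for arg in filter(None, (a.strip() for a in arg_list.split(","))):
        arg_name = re.findall(r"\w+", arg)[-1]
        # Pointer types are buffers; everything else is a scalar parameter.
        (buffers if "*" in arg else params).append(arg_name)
    return {"name": name, "buffers": buffers, "params": params}

source = """
void passthrough(uint8_t *in_buffer, uint8_t* out_buffer, uint32_t nbytes) {
    for(int i=0; i<nbytes; i++) {
        out_buffer[i] = in_buffer[i];
    }
}
"""
print(describe_kernel(source))
# {'name': 'passthrough', 'buffers': ['in_buffer', 'out_buffer'], 'params': ['nbytes']}
```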

Structure of the Riallto NPU package#

The main Riallto Python package is the npu package. The following section gives an overview of the key modules and user methods that are used to build NPU applications in the next notebooks in this section. You can review the npu documentation for more details on the NPU Python package or follow the links for modules below.

npu.build#

Contains modules that will be used to build an application for the NPU.

-

Used to build NPU applications from a high-level description specified in the callgraph() method.

AppBuilder builds the complete NPU application. It creates an MLIR .aie file, mlir.aie, which is used as input for the MLIR-AIE compiler. When the process finishes, an .xclbin file and a companion .json file are produced. The .xclbin file is a container file that includes multiple binary files, among them the NPU configuration and the executables for the software kernels in the application. The .json file contains application metadata that Riallto uses to display information about the application and its visualizations.

Selected user methods that will be used in the section 4 examples:

build() - builds the application

callgraph() - describes how the kernels are connected together and how data flows through them

display() - visualizes how your application is mapped to the NPU
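callgraph() describes connectivity as code that reads like ordinary function calls. One general way to turn such code into a graph is tracing. Each kernel call is recorded rather than executed, and the value it returns stands in for the buffer that will carry its output.

The sketch below illustrates that general idea in plain Python. `Buffer`, `ToyApp`, and the recorded edge list are hypothetical. This is not Riallto's AppBuilder, and it does not claim that Riallto implements callgraph() this way.

```python
class Buffer:
    """Symbolic stand-in for the data that flows between kernels."""
    def __init__(self, name):
        self.name = name

class ToyApp:
    """Hypothetical app whose callgraph() is traced rather than executed."""
    def __init__(self):
        self.edges = []  # (input buffer, kernel, output buffer)
        self.rgba2gray = self._traced("rgba2gray")
        self.threshold = self._traced("threshold")

    def _traced(self, kernel_name):
        def call(inp):
            out = Buffer(f"{kernel_name}_out")
            self.edges.append((inp.name, kernel_name, out.name))  # record the connection
            return out
        return call

    def callgraph(self, x):
        # Reads like ordinary code; each call only records which buffer feeds which kernel.
        y = self.rgba2gray(x)
        return self.threshold(y)

app = ToyApp()
final = app.callgraph(Buffer("input"))
for edge in app.edges:
    print(edge)
```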

-

Includes the methods to allocate buffers in external memory and configure the buffers for the NPU.

-

Includes the kernel object that can be used to build a software kernel for an AI Engine processor.

build() - calls the AI Engine compiler to build the software kernel

to_cpp() - converts to C++

You can also read back source code from the kernel object, view the object file, and display the kernel.

-

Includes methods for managing reads and writes between the interface tile and external memory.

npu.lib#

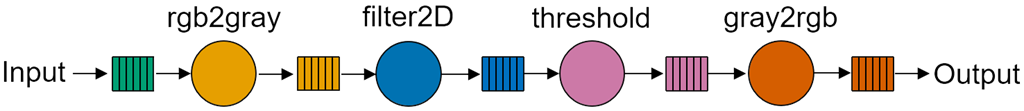

Contains a small library of example applications and example image processing software kernels. Most of the example applications are used in section 3. They include the color detect and color threshold examples (and their variations), and the edge detect and denoise examples.

The software kernels include the functions used to build the examples mentioned above. For example, rgba2gray() and rgb2hue() are reusable software kernels that perform the color conversions used in the example applications. Other software kernels (e.g., filter2d(), median(), and bitwise functions) are used to process data in the example applications. You can include the provided software kernels in your own custom applications.
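To show what reusing kernels looks like, here are pure-Python reference models of two kinds of operation mentioned above. One is a grayscale color conversion and the other is a threshold, composed into a small pipeline.

Several details are assumptions made for illustration:

- the function names;
- the integer weights (77, 150, 29)/256, an approximation of the common BT.601 luma weights;
- the binary threshold behavior.

These are not the implementations or signatures of Riallto's rgba2gray() kernel or of the kernels in its threshold examples.

```python
def rgba_to_gray(pixels):
    """Convert (r, g, b, a) tuples to 8-bit gray; the weights 77 + 150 + 29 sum to 256."""
    return [(77 * r + 150 * g + 29 * b) >> 8 for r, g, b, _a in pixels]

def threshold(values, level, max_value=255):
    """Binary threshold: values above level become max_value, all others become 0."""
    return [max_value if v > level else 0 for v in values]

def gray_threshold(pixels, level):
    # Reuse: the output of one kernel model is the input of the next.
    return threshold(rgba_to_gray(pixels), level)

image = [(255, 255, 255, 255), (0, 0, 0, 255), (255, 0, 0, 255)]
print(rgba_to_gray(image))         # [255, 0, 76]
print(gray_threshold(image, 100))  # [255, 0, 0]
```

Because the weights sum to 256, a white pixel maps exactly to 255 after the 8-bit shift.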

For a list of available applications and kernels, use videoapps() and aiekernels() from npu.utils (described below), or review the npu.lib documentation.

npu.utils#

Includes useful utilities for developing applications.

-

Provides information about applications currently running on the NPU. This command can be run standalone.

-

Returns a list of software kernels in the Riallto kernel library

-

Returns a list of video application examples available in the Riallto applications library

npu.runtime#

Loads applications from an xclbin file to the NPU, synchronizes data, and visualizes the application.

-

sync_{to|from}_npu() - manages data synchronization between the x86 CPU and the NPU, which are not cache coherent

call() - configures the NPU and loads the individual software kernels into the compute tiles. When this method completes, the application is running on the NPU

display() - displays a visualization of the application mapped to the NPU array
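Because the x86 CPU and the NPU are not cache coherent, a write on one side is not guaranteed to be visible to the other until it is explicitly synchronized. The toy model below makes that rule visible. The CPU works on its own cached view, and only the two sync methods copy data across.

`ToyBuffer` and `fake_npu_invert` are hypothetical. The method names mirror the sync_{to|from}_npu naming above, but this is not Riallto's buffer implementation.

```python
class ToyBuffer:
    """Models a buffer shared by a CPU (with a cache) and an NPU (no coherency)."""
    def __init__(self, size):
        self.cpu_view = [0] * size  # what the CPU sees (its cached copy)
        self.memory = [0] * size    # external memory that the NPU reads and writes

    def sync_to_npu(self):
        # Flush CPU-side writes to memory so the NPU sees them.
        self.memory = list(self.cpu_view)

    def sync_from_npu(self):
        # Refresh the CPU's view with results the NPU wrote to memory.
        self.cpu_view = list(self.memory)

def fake_npu_invert(buf):
    """Stand-in for an NPU kernel: it only ever touches buf.memory."""
    buf.memory = [255 - v for v in buf.memory]

buf = ToyBuffer(3)
buf.cpu_view[:] = [10, 20, 30]
fake_npu_invert(buf)         # no sync_to_npu yet, so the "NPU" processes stale zeros
stale = list(buf.memory)     # [255, 255, 255]
buf.sync_to_npu()            # memory now holds [10, 20, 30]
fake_npu_invert(buf)
before = list(buf.cpu_view)  # still [10, 20, 30]: results are not visible yet
buf.sync_from_npu()
print(buf.cpu_view)          # [245, 235, 225]
```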

Also included are methods for building widgets to control the runtime parameters (RTPs):

rtpwidgets() - helper function to build user widgets

rtpsliders() - automatically generates slider widgets for any runtime parameters

MLIR-AIE#

The MLIR-AIE toolchain is part of what we described above as the AIEtools used by Riallto.

The MLIR-AIE compiler is an open-source research project from the Research and Advanced Development group (RAD) at AMD. This project is primarily intended to support tool builders with convenient low-level access to devices and enable the development of a wide variety of programming models from higher level abstractions.

The input to the MLIR-AIE compiler is an MLIR intermediate representation, which the Riallto framework generates as an MLIR source file. You will see more of this file format later.

If you would like to explore the capabilities of the NPU beyond the application patterns of this framework, we recommend you use MLIR-AIE directly.

Next Steps#

In the next notebooks, we will guide you through creating custom software kernels and using a pre-configured graph to generate an application. Then, we will describe how to build your own custom dataflow graph by using the Riallto AppBuilder and a set of optimized software kernels from the Riallto library.